AI infrastructure at a modern applied university: a look behind the scenes

Artificial intelligence (AI) has become increasingly important in recent years. The majority of the population now consciously uses AI systems in everyday life, such as the chatbot ChatGPT or voice assistants like Siri and Alexa. We also interact with this technology more and more without noticing it, for example through personalized product recommendations in online shopping or through movie and show recommendations on streaming platforms.

Most advanced AI systems are built on artificial neural networks, which are computationally intensive to run and especially to train. To give our students at the University of Applied Sciences Kaiserslautern the opportunity to work intensively with AI and to deepen their knowledge, the Department of Computer Science and Microsystems Technology now provides a powerful infrastructure for such projects.

The current concept for this was developed and implemented as part of Prof. Dr. Beggel's Master's course in Deep Learning.

Motivation

A problem arose during the deep learning lecture: all course participants tried desperately to get a free VM (virtual machine) with a GPU (graphics processing unit) in the Google Cloud. These GPUs are needed to work efficiently with AI systems. Although the students had been given credit, it was of no use as long as no such VM was available. As a result, a lot of lecture time was lost.

Fortunately, our university had recently acquired powerful computers that had not yet been set up for teaching purposes. This project aimed to change that by making these resources available to students.

Our approach

To get an overview of what the system should be able to do, we spoke to seven professors and employees at the University of Applied Sciences Kaiserslautern who are either interested in AI servers or have already worked with the servers. Almost all of them needed the system to support projects, Bachelor's and Master's theses, and lectures. The use cases ranged from transfer projects and theses to automated product and marketing pipelines. There were also more specific requirements: one professor needed automated containers for lectures, and another needed a system for generative AI and an API for LLMs. Other ideas for using the servers included cloud rendering (generating images or videos on a server instead of on the user's computer) and agent systems (autonomous AI units that make their own decisions and react to circumstances).

Based on the requirements and wishes for the system, we came to the conclusion that several system types are necessary and that the servers must fulfill different tasks.

Three system types emerged that together cover the requirements and wishes of the professors and employees.

Service system

This system offers powerful Large Language Models (LLMs) and Stable Diffusion models, which are accessible via APIs and an intuitive web interface. Members of the university can send requests to the API, whether they are connected to the university network directly or via VPN, or they can use the service through the web interface. The special feature: users do not have to deal with the technical details of the server or configure anything themselves. Everything runs automatically.

Project system

Individual workspaces with remote access are essential for supporting students, employees and professors in their projects and lectures. In these workspaces, users should be able to train and apply their own AI models. This requires a project server on which the computing power of the GPUs is distributed among the users. In this way, each person has their own workspace for working efficiently and conveniently on AI projects.

Isolated system

To ensure the security and reliability of projects carried out in collaboration with external partners and companies, it is necessary to set up a system that can guarantee the highest security standards and constant performance. To this end, hard disks are encrypted and GPUs are assigned on a project-specific basis to enable reliable and efficient collaboration.

The servers

Our department has four servers specially optimized for artificial intelligence, providing a total of 12 A100 GPUs, 2 RTX 2090s, 216 processor cores and an impressive 1.6 TB of RAM. These machines, named Megatron, Skynet, Genisys and GLaDOS - inspired by artificial intelligence and robots from pop culture - form the foundation for our projects and the resulting services. Together, they enable our university to research innovative solutions and services in the field of AI and use them in teaching.

We use Debian 12 as the operating system on these servers. Debian is a Linux distribution that is widely used on servers in particular, and NVIDIA supports it for use with their GPUs.

Debian 12 also ships with Python 3.11, a scripting language that is often used for modern AI applications, from relatively simple neural networks to complex LLMs such as those behind ChatGPT.

In addition, isolated and premade applications and systems can be started under Debian using the Docker software. This makes it possible to use a premade development environment in software and AI development, which reduces the time it takes to complete a project.

But other projects with high computing power requirements are also well catered for, with support for common programming languages such as C/C++ or Java.

Our services and possibilities

To make these servers accessible to our students and staff, we offer a variety of services that enable a wide range of projects. From exercises in lectures to the use of LLMs for text generation to Bachelor's or Master's theses, everything can be realized on these servers.

Text generation

One of the services we offer is our API for Large Language Models (LLMs), which we have implemented with the Ollama software. This solution allows our users to carry out large-scale projects with LLMs free of charge, without an OpenAI or Anthropic account, which can be very costly depending on the use case. This has already proven its worth in several projects at our university that would otherwise have had to send massive amounts of data to a cloud provider. In addition, operating the LLMs locally allows sensitive data to be processed in compliance with data protection rules, since confidential data never has to be transmitted to external cloud providers.

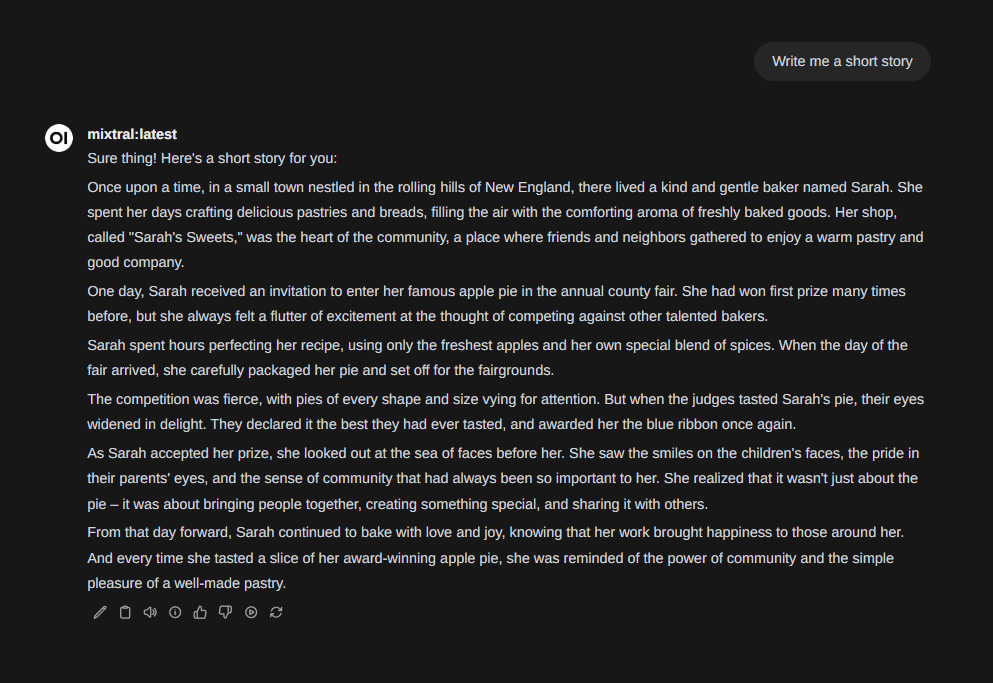

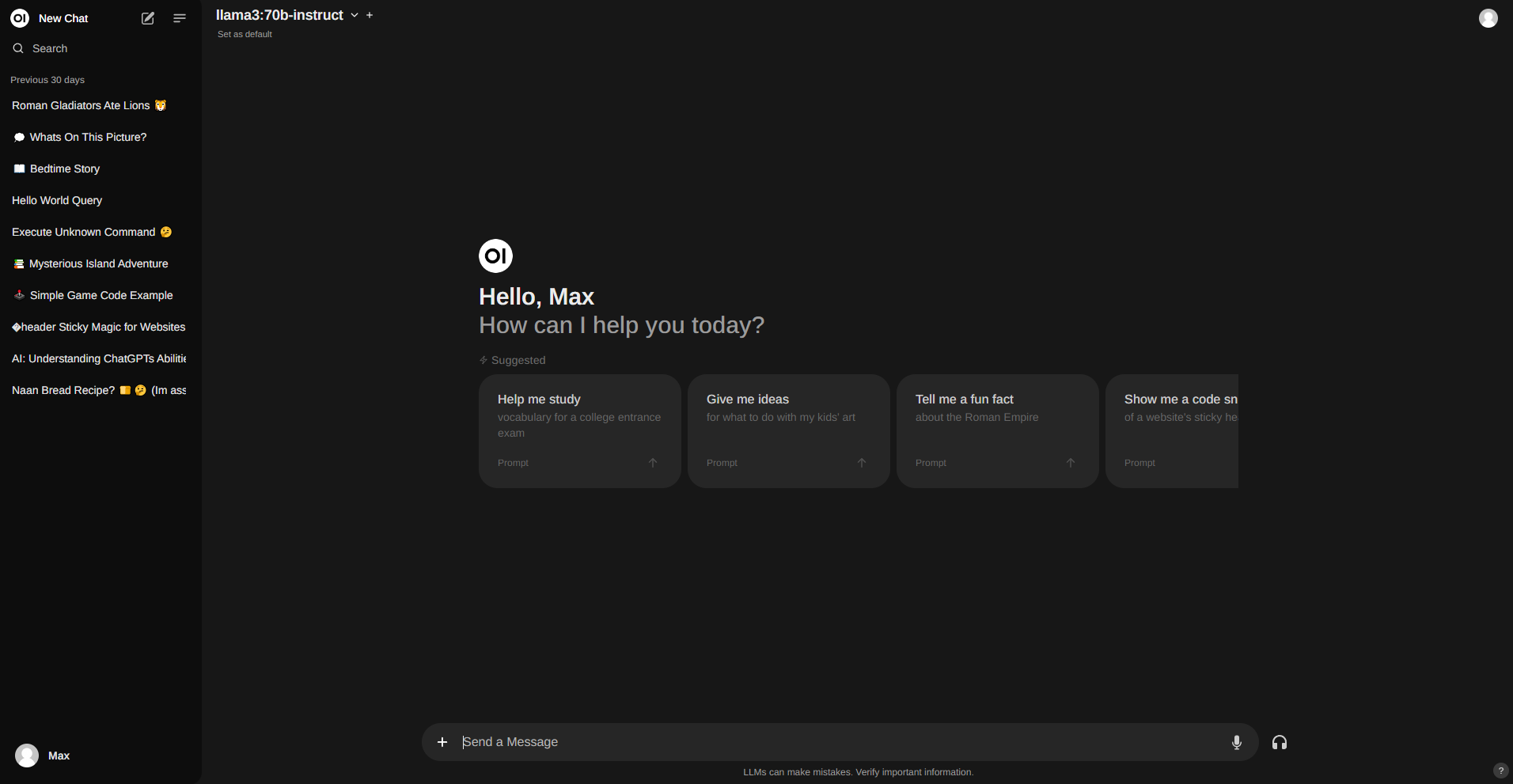

Our Large Language Models (LLMs) can be used in two ways: via an API that allows programs and other servers to use our service, or via a user-friendly web interface similar to that of OpenAI's ChatGPT. For the web interface, we use the free software OpenWebUI, which was developed specifically for interacting with LLMs. It offers our users a wide range of options: they can start new chats with our LLMs and hold conversations, manage and track chat histories, and try out and compare different models. OpenWebUI also has advantages for our administrators. We can add new models, unlock existing ones and customize them to provide the best possible experience for our users, and the software makes it easy to manage user accounts. With this service, our students can use LLMs easily and intuitively and take full advantage of artificial intelligence at our university.
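To illustrate the API route, here is a minimal sketch of how a program might send a prompt to an Ollama server using only the Python standard library. The host name `llm.example.edu` and the model name `llama3` are placeholders, not the real address or model list of our service, and the sketch assumes that Ollama's `/api/generate` endpoint is reachable without additional authentication, which may not be the case in a given deployment.

```python
import json
import urllib.request

# Hypothetical address: replace it with the endpoint announced for the service.
BASE_URL = "http://llm.example.edu:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # name of a model installed on the server
        "prompt": prompt,  # the text the model should respond to
        "stream": False,   # ask for a single JSON object instead of a token stream
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the generated text is in the "response" field.
    return body["response"]
```

A call such as `generate("llama3", "Explain in one sentence what a GPU is.")` would then return the model's answer as a string, provided the server is reachable from the university network or via VPN.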

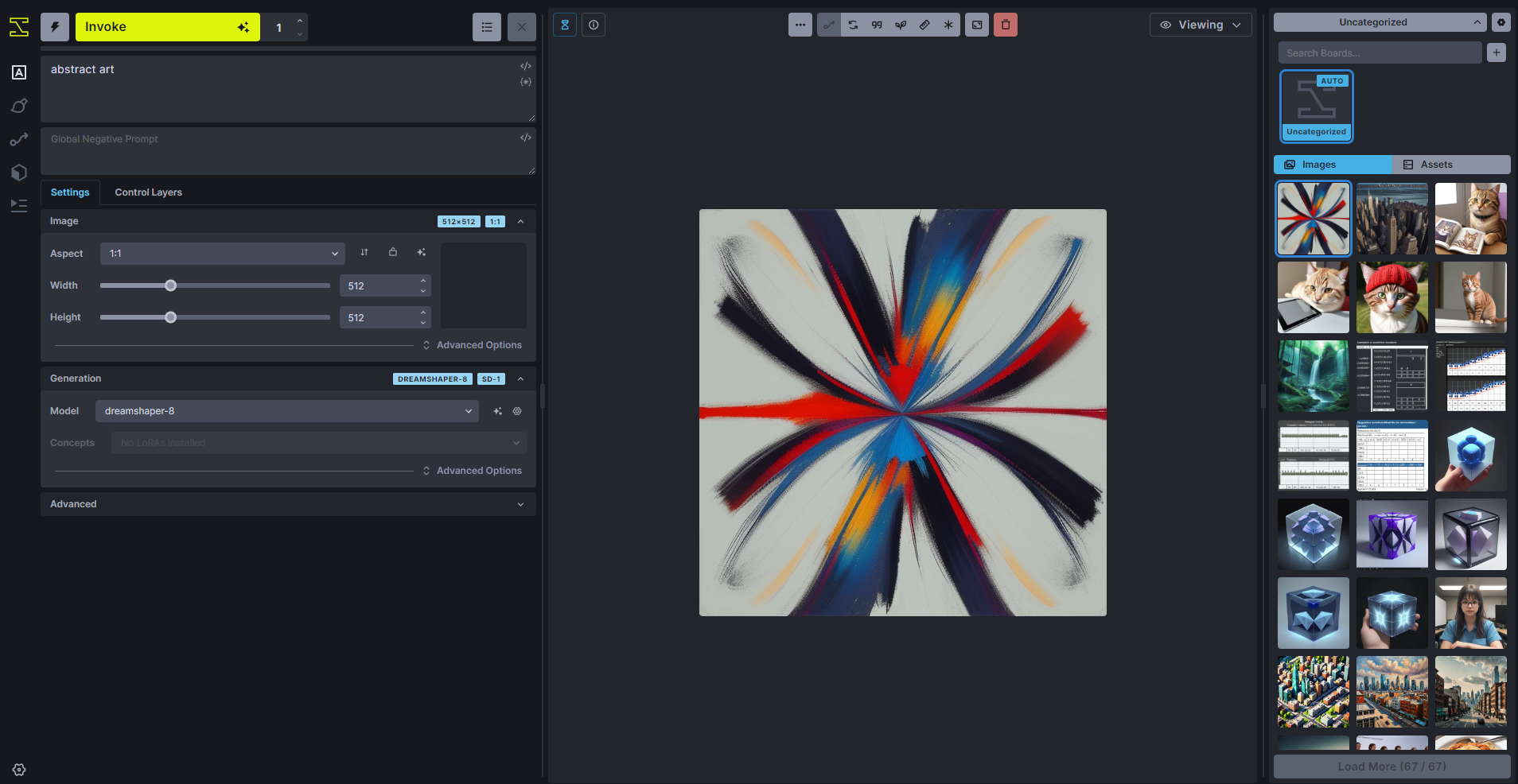

Image generation

Another service is the web-based interface InvokeAI, which makes it possible to use Stable Diffusion models to generate images. Similar to the Large Language Models web interface, this also offers a variety of customizable options for the request. By simply adding different models, selecting certain configurations - such as specifying that all elements should consist of spaghetti 🙂 - or setting the aspect ratio and image size, the user can customize their request. The interface also offers the option of changing certain areas in a previously generated or uploaded image or uploading a reference image. Users can also view and download their generated images.

Project systems

For students and professors who need a system on which to use AI for a project or for their Bachelor's or Master's thesis, the above-mentioned project systems and isolated systems are available. Members of our Department of Computer Science and Microsystems Technology can request remote access from the administrators to use these systems for their AI projects.
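Once remote access has been granted, a quick first step is to check which GPUs are visible in your workspace. The following is a minimal sketch that assumes the NVIDIA command-line tool `nvidia-smi` is installed and on the path; the function names are our own illustration and not part of the server setup.

```python
import subprocess


def parse_gpu_list(csv_text: str) -> list[dict]:
    """Parse lines of the form 'index, name, memory' as printed by nvidia-smi."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, name, memory_mib = [field.strip() for field in line.split(",")]
        gpus.append({"index": int(index), "name": name, "memory_mib": int(memory_mib)})
    return gpus


def visible_gpus() -> list[dict]:
    """Ask nvidia-smi which GPUs the current session can see."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=index,name,memory.total",
            "--format=csv,noheader,nounits",  # plain values, memory in MiB
        ],
        capture_output=True,
        text=True,
        check=True,  # raise an error if nvidia-smi fails
    )
    return parse_gpu_list(result.stdout)
```

Calling `visible_gpus()` returns one dictionary per GPU with its index, name and total memory in MiB, which makes it easy to confirm that the expected hardware is available before starting a long training run.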

Try it out for yourself!

If you are interested in these topics, you can try out most of the systems yourself on a small scale using a powerful notebook or gaming PC.

LLMs

Our LLM service is built with the Ollama software, which can be installed very easily on most computers using the installer from the ollama.com website. By default, however, it is operated via the command line of the operating system. For people who are not familiar with the console, the GPT4All software can be installed locally as an alternative. It works on a similar principle and is easy to use even for laypeople.
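If you would rather talk to a local Ollama installation from a program than from the command line, the following minimal sketch shows one way to do it. It assumes Ollama is running on its default local address `http://localhost:11434` and that you have already downloaded at least one model; the helper names and the model name `llama3` in the usage note are illustrative.

```python
import json
import urllib.request

# Ollama's default local address; adjust it if you changed the port.
OLLAMA_URL = "http://localhost:11434"


def extract_model_names(tags_response: dict) -> list[str]:
    """Pull the model names out of the JSON returned by GET /api/tags."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def list_local_models() -> list[str]:
    """Return the names of all models installed in the local Ollama instance."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=10) as response:
        return extract_model_names(json.loads(response.read().decode("utf-8")))


def build_chat_payload(model: str, question: str) -> dict:
    """Build the JSON body for a single-turn request to POST /api/chat."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,  # return the whole answer in one JSON object
    }


def chat(model: str, question: str, timeout: float = 300.0) -> str:
    """Ask the local model one question and return its answer."""
    request = urllib.request.Request(
        url=f"{OLLAMA_URL}/api/chat",
        data=json.dumps(build_chat_payload(model, question)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # The assistant's reply is stored under message.content.
    return body["message"]["content"]
```

You could first call `list_local_models()` to see what is installed and then pass one of the returned names to `chat`, for example `chat("llama3", "What is a neural network?")`. The generous timeout is deliberate, since the first answer on a notebook can take a while when the model still has to be loaded into memory.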

For a complete system with WebUI and API, as we have installed on our servers, prior knowledge of the administration of Linux servers and Docker is required. In our case, the service is described via a Docker Compose file and executed using Docker. Here is an example of a Docker Compose file as it is used in our systems.

Image generation

We currently use InvokeAI to generate images. Installing InvokeAI requires basic knowledge of administering Linux or Windows systems, as the installation is carried out via the command line of the operating system. InvokeAI provides comprehensive instructions that are easy to follow for users with command-line experience. A simple installation for laypeople is not available.

Want to find out more?

If you are a member of the university and are looking for information on how to get access to the services, you can find it in the Mattermost of the University of Applied Sciences Kaiserslautern in the channel "KI Services and Resources" under this link.

If you are not a member of the university and would like some insight into how the department has set up and operates these servers, for example because you want to set up such a system yourself, send an email to Bastian Beggel.